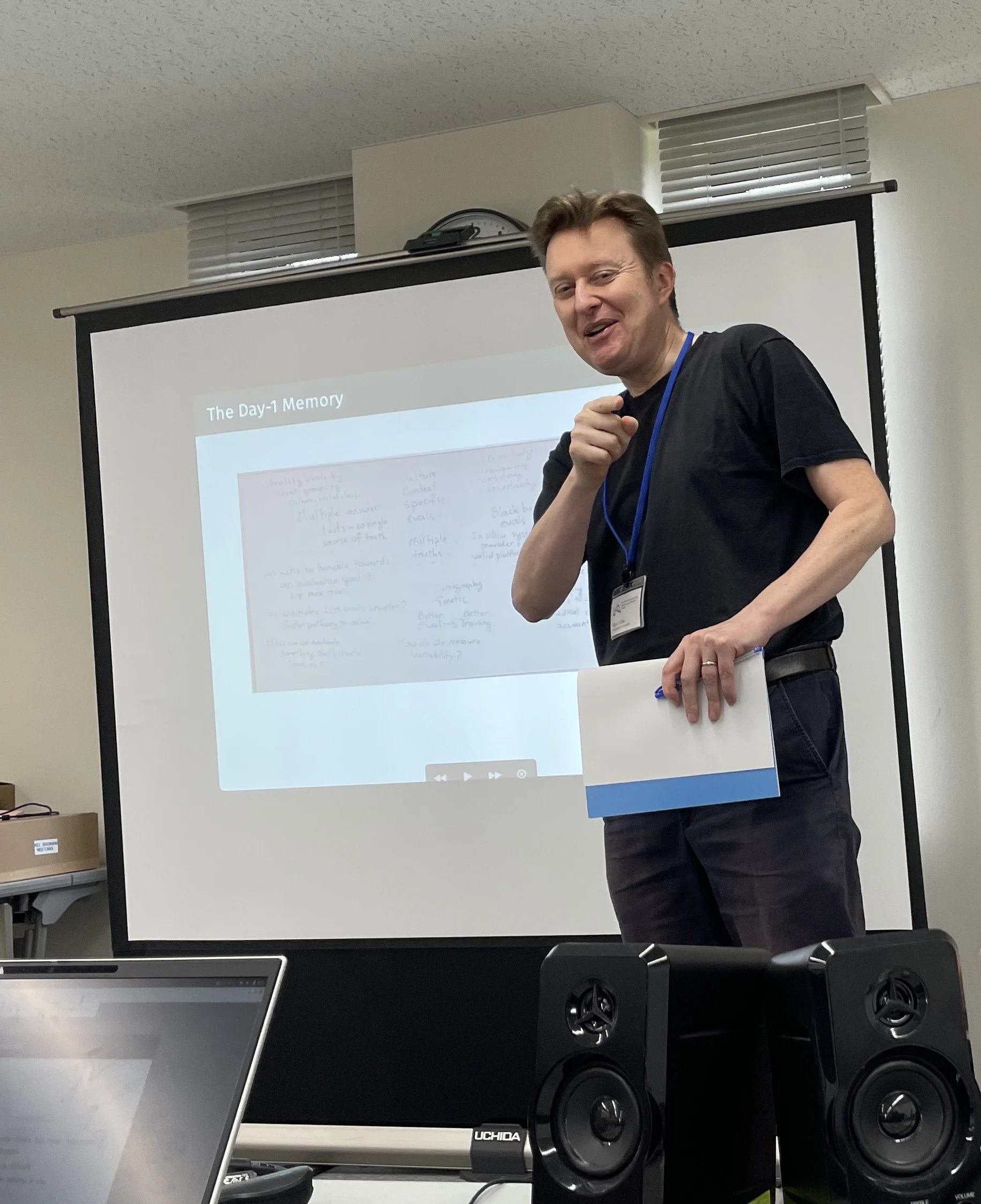

Nigel H. Collier

I’m Professor of Natural Language Processing at the University of Cambridge and Chief Scientist of Trismik. My work focuses on creating better AI systems with an emphasis on uncertainty, personalization, and adversarial assessment of model behaviour.

Reflections

Occasional opinion articles exploring humanistic perspectives on AI.

I hope you enjoy these essays. Please share your reflections on LinkedIn. I try to read as many comments as I can, although I may not be able to respond personally. Thoughtful, constructive, and considerate perspectives are always appreciated.

Spotlight

Short notes on the latest published work from our team at Cambridge and our partners.

UNCLE: Benchmarking Uncertainty Expressions in Long-Form Generation

Large language models often sound confident, even when wrong. This study benchmarks how they express uncertainty, helping researchers design models that reason and admit doubt more like people do.

SIMBENCH: Benchmarking the Ability of Large Language Models to Simulate Human Behaviors

SimBench sets a new standard for evaluating AI as a mirror of human behaviours, uniting 20 diverse datasets to reveal when model simulations succeed, fail, and why that matters.

Conformity in Large Language Models

This study exposes how AI models often ‘follow the crowd,’ mirroring social conformity. Understanding and correcting this helps build systems that think independently and better reflect human diversity.

500xCompressor: Generalized Prompt Compression for Large Language Models

This work demonstrates a major gain in large-language-model efficiency: prompts can be compressed up to 500× with minimal accuracy loss, increasing speed and efficiency without retraining.

Generative Language Models Exhibit Social Identity Biases

LLMs often reflect ‘us vs them’ biases ingrained in human data, favouring in-group members and dismissing others. Recognising and curbing these tendencies is vital to building fair, inclusive AI systems.

Trident: Benchmarking LLM Safety in Finance, Medicine, and Law

Trident explores how safely large language models operate in finance, medicine, and law, revealing high-stakes domains where today’s AI still falters and helping society build more trustworthy systems for critical decisions.

Research

Published articles and pre-prints from our team and partners.

Meet the Team

- Yinhong Liu: Evaluation, Calibration, and Alignment
- Meiru Zhang: D4, Event Extraction and Forecasting
- Tiancheng Hu: D3, Personalization and Social Simulations
- Chang Shu: D3, Reasoning and Alignment
- Caiqi Zhang: D3, Uncertainty and Factuality
- Yinjiang River Dong: D2, Personality and Personalization
- Zongqian Li: D2, Efficiency and Multimodality
- Sanhanat Sivapiromrat: D2, Safety and Alignment
- Auss Abbood: D2, Digital Disease Surveillance
- Paul Martin: D2, Modular and Efficient Deep Learning
- Zack Hui: D1, Safety and Alignment
- Ehsan Shareghi: Affiliated Lecturer
- Zaiqiao Meng: Affiliated Lecturer
- Zihao Fu: Affiliated Lecturer

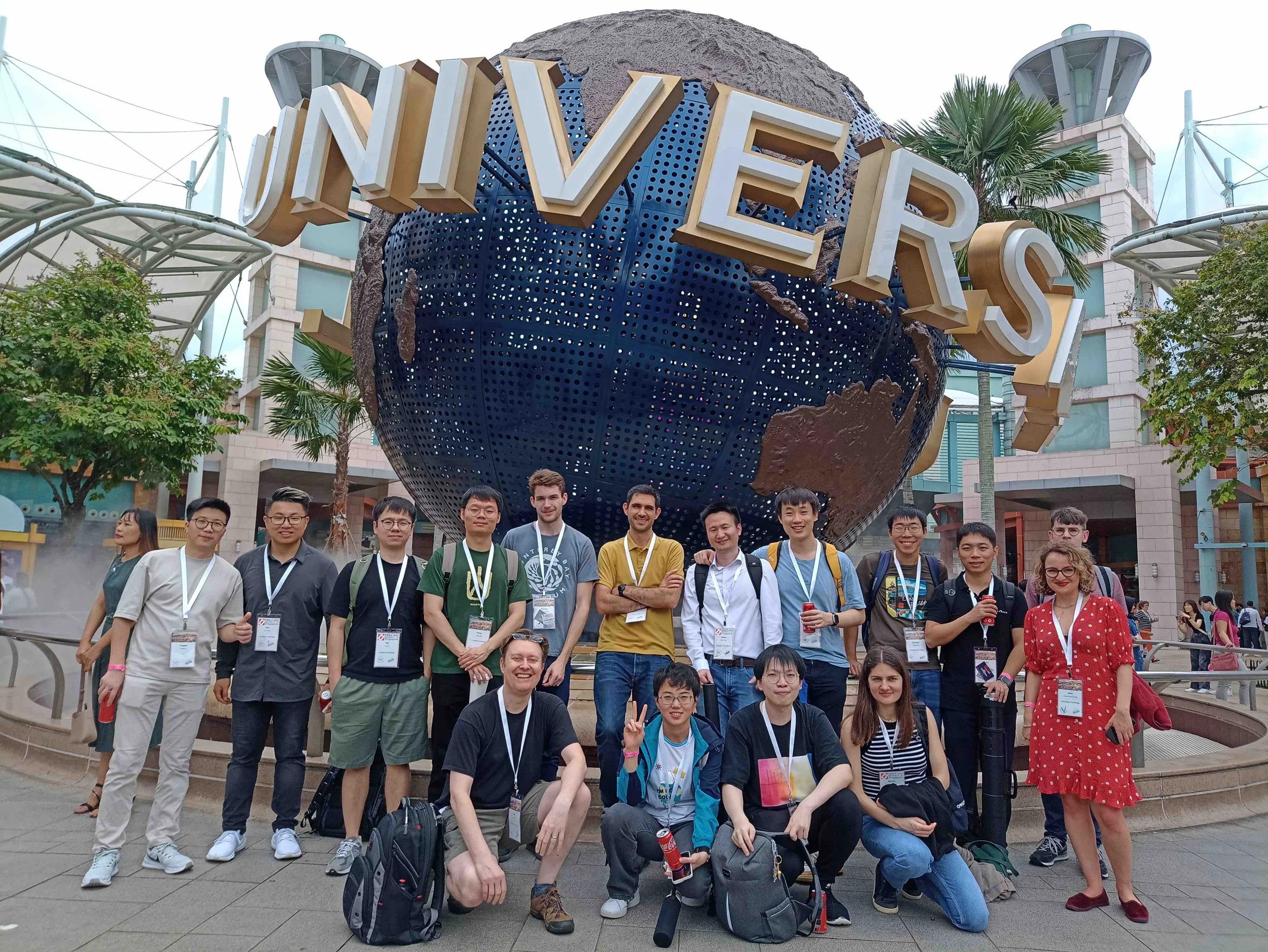

Our team is part of the Language Technology Lab (LTL) at Cambridge. LTL investigates computation, cognition, and language through technically rigorous, experiment-based NLP research. We value intellectual curiosity, collaboration, and precision, and we welcome applicants ready to engage deeply with challenging ideas.

Prospective PhD students: I am always interested in supervising new NLP projects on the PhD in Computation, Cognition and Language. Before contacting me, please make sure that you meet the minimum requirements and take time to check out my publications. The work we do in my team is technical and experiment-based, so please apply only if you have strong programming skills. In your email, please send a CV with a brief statement of research interests. Please note the application deadline and the documents you need to submit with your application.

For 2026 applicants: I will accept 2 or 3 PhD students in 2026. The MPhil by Research in language sciences also offers places to applicants with an NLP background, and it can be a great springboard to PhD research.

Bio

I have been working in NLP and AI for over 30 years. Before joining the University of Cambridge on an EPSRC Experienced Researcher Fellowship (2015-2020), I spent the early part of my career in Japan (1996-2012). I was a Toshiba Fellow, a postdoc at Tokyo University with Junichi Tsujii, and an Associate Professor at the (then) newly formed National Institute of Informatics, where I led the NLP lab for 12 years before returning to the UK on a Marie Curie Research Fellowship. As an undergraduate I studied for a BSc in Computer Science at the University of Leeds (1992). I received an MSc in Machine Translation (1994) and a PhD in Computational Linguistics (1996) from the University of Manchester (UMIST) for my research on English-Japanese Lexical Transfer using a Hopfield Neural Network. My current roles are Professor of NLP, co-lead of the Language Technology Lab at Cambridge, Professorial Fellow at Murray Edwards College, and Chief Scientist at Trismik, a spinout I co-founded that launched in May 2025.